Camera Match

-

I've tried a number of times to camera-match this image and wondered if you could try it, so I can analyze how you did it.

I had the match solved, but I often found the focal length would vary. I also couldn't get new objects to "sit" on the floor by default. Lastly, the camera would often be slightly pitched or banked. In 3DSMax, you can set the camera to auto-correct vertical tilt (as with a tilt-shift lens), which prevents this issue.

Curious to see your result.

https://www.icloud.com/iclouddrive/02e_R3PxPC69ZIEptQcftoxRA#camera_match

-

Hi smckenzie,

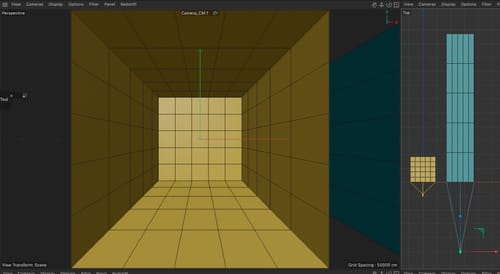

Here is the best I can do manually; there is no way to get more out of this image.

https://projectfiles.maxon.net/Cineversity_Forum_Support/2025_PROJECTS_DRS/20250215_CV4_2025_drs_25_CMcp_01.zip

Since the image was cropped, I set up two render settings; the Main Camera is for the smaller 3:4 (or, if you will, 0.75:1) ratio.

A lot of information is missing here, and more trial and error might get you a better match.

In 2006, I gave a week-long class on this subject to production artists in San Francisco. Without any automatic systems back then, my suggestion was to find the POI (center of the image) and the POV (the camera's point of view), then set the camera's "P.Y" and "P.X" and the POI's "P.Y" and "P.X" to match. The camera's "P.Z" is then the key parameter to move. This works well when the focal length and sensor size are known: move the camera along "P.Z" until the projection matches the set survey data. If the image is cropped, it is unclear where the POV is, and if it was adjusted for perspective distortion [sic], that information is gone as well.
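A minimal sketch of the similar-triangles relation behind "move the camera along P.Z until the projection matches the survey data", assuming a known focal length, a known sensor width, an uncropped image, and one surveyed width lying in a plane parallel to the sensor. The helper name and the numbers are hypothetical, not taken from the project file.

```python
def camera_distance(focal_mm, sensor_width_mm, image_width_px,
                    object_width_px, object_width_m):
    """Camera-to-object distance (m) for an object parallel to the sensor.

    Hypothetical helper: assumes an uncropped image, so the full image width
    maps to the full sensor width, and a known (surveyed) object width.
    """
    # Width the object occupies on the sensor, in mm
    object_on_sensor_mm = object_width_px / image_width_px * sensor_width_mm
    # Similar triangles: object_width / distance = object_on_sensor / focal
    return object_width_m * focal_mm / object_on_sensor_mm


# Illustrative numbers only: a 6 m wide element spanning half of a
# 6000 px wide full-frame image shot with a 24mm lens.
print(camera_distance(24, 36, 6000, 3000, 6.0))  # prints 8.0
```

If the image was cropped, `image_width_px` no longer corresponds to `sensor_width_mm`, and the distance comes out wrong by the crop ratio, one concrete reason why cropping hurts the match.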

I have set up an XPresso rig so that the CM (Camera Match) camera can be moved and its field of view adjusted accordingly.
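I don't know exactly how the XPresso in the project file is wired; a hypothetical sketch of the relation such a rig can use: the image size of a plane at distance d is proportional to f / d, so to keep the matched plane the same size in frame, the focal length has to scale with the camera-to-plane distance (the "dolly zoom" relation).

```python
def focal_for_distance(f_ref_mm, d_ref_m, d_new_m):
    """Focal length that keeps the matched plane the same size in frame.

    Hypothetical helper: image size of a plane at distance d is proportional
    to f / d, so when the camera moves from d_ref to d_new, f scales by the
    same ratio.
    """
    return f_ref_mm * d_new_m / d_ref_m


# Hypothetical reference match: 24mm with the matched plane 5 m away.
for d in (5.0, 10.0, 20.0):
    print(d, focal_for_distance(24.0, 5.0, d))
```

Only the matched plane stays fixed; everything in front of or behind it changes size in frame, which is why a match on one plane alone cannot pin down the camera.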


Here is a little "shopping list" for shots like this. Auto-correcting the image first is the wrong idea, as it takes even more information away and often adds complexity to the perspective. The task is to define the POI/POV and the focal length from this cropped and (post-?)adjusted image. I assume it is cropped, as 1:1.65 is a rare ratio.

There is just too little information: no set survey, no focal length, and the image format (ratio) matches nothing I'm aware of. Not that I know all cameras, but it is also not vertical HD.

If this is an uncropped image, I would say with some certainty that the optics and the sensor are not aligned (axial) in either direction, vertical or horizontal. That typically indicates a small sensor. I wouldn't go as far as to say it is a phone image, but close.

The room was reproduced with a rectilinear wide-angle lens, which keeps lines straight. This means it mostly captures the information in the Z direction, which is the first problem.

Having no data at all about the lens is the next problem. Something tells me it was a 24mm, but I wouldn't be surprised if it was even a 17mm (assuming a full-frame sensor, 24x36mm).
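To put the 17mm versus 24mm guess into numbers, a short sketch of the angle of view of a rectilinear lens, 2·atan(d / 2f), across each dimension of a full-frame 24x36mm sensor. For a portrait frame like this one, swap the horizontal and vertical values.

```python
import math


def fov_deg(focal_mm, sensor_dim_mm):
    """Angle of view (degrees) of a rectilinear lens across one sensor dimension."""
    return math.degrees(2 * math.atan(sensor_dim_mm / (2 * focal_mm)))


# Full-frame 24x36mm sensor, landscape orientation.
FULL_FRAME_MM = {
    "horizontal": 36.0,
    "vertical": 24.0,
    "diagonal": math.hypot(36.0, 24.0),
}

for focal in (17, 24):
    angles = {name: round(fov_deg(focal, dim), 1) for name, dim in FULL_FRAME_MM.items()}
    print(f"{focal}mm: {angles}")
```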

A missing part of the image (the red cover) is the next problem: the closer the image data is to the camera, the more precise it is, and exactly that data is gone.

In short, for any work like this: never correct the image, never crop it, and shoot a rectangular grid at the focus distance to check for lens distortion. The focus distance matters because still lenses often breathe; a lens grid shot at close focus is a waste of time, as breathing changes the image size.
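One way to quantify "is this grid line straight?" is a minimal sketch like the following: pick points along one line of the photographed grid, fit a straight line (total least squares), and report the largest perpendicular deviation in pixels. A systematic bow that grows toward the frame edges hints at lens distortion. The helper name and the sample coordinates are made up for illustration.

```python
import math


def max_line_deviation(points):
    """Largest perpendicular distance (px) of 2D points from their best-fit line."""
    n = len(points)
    mx = sum(x for x, _ in points) / n
    my = sum(y for _, y in points) / n
    sxx = sum((x - mx) ** 2 for x, _ in points)
    syy = sum((y - my) ** 2 for _, y in points)
    sxy = sum((x - mx) * (y - my) for x, y in points)
    # Direction of largest spread = orientation of the total-least-squares line
    theta = 0.5 * math.atan2(2 * sxy, sxx - syy)
    # Unit normal to the fitted line
    nx, ny = -math.sin(theta), math.cos(theta)
    return max(abs((x - mx) * nx + (y - my) * ny) for x, y in points)


# Illustrative points clicked along one photographed grid line near the frame edge.
edge_line = [(102.0, 40.0), (98.5, 400.0), (97.0, 800.0), (98.6, 1200.0), (102.2, 1560.0)]
print(round(max_line_deviation(edge_line), 2))
```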


I'm happy to look into it if you have sufficient set data and an untouched image.

Cheers

-

Thanks for your efforts here; I'll study what you did.

For some context:

This image is part of a visualization course in 3DSMax, in this case to recreate this scene. The whole point is to be able to match an image without known information like a floor plan, focal length and so on.

The first stage was to find the vanishing point and make sure it is in the middle of the image. To do this, the image is cropped until the vanishing point sits in the middle, checked with Photoshop rulers set at 50%. It seems that if the vanishing point isn't in the middle, the perspective match won't work correctly.
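In a one-point perspective with the image plane parallel to the back wall, lines running into depth converge at the principal point, which sits at the image center only for an uncropped, unshifted image; presumably that is why the tool expects it in the middle. A minimal sketch (hypothetical helper names, made-up coordinates) that intersects two traced depth lines in homogeneous coordinates and reports how far the vanishing point is from the center. With more than two lines, a least-squares intersection would be the better choice.

```python
def line_through(p, q):
    """Homogeneous line (a, b, c), a*x + b*y + c = 0, through image points p and q."""
    (x1, y1), (x2, y2) = p, q
    # Cross product of (x1, y1, 1) and (x2, y2, 1)
    return (y1 - y2, x2 - x1, x1 * y2 - x2 * y1)


def intersect(l1, l2):
    """Intersection of two homogeneous lines, or None if they are parallel in the image."""
    a1, b1, c1 = l1
    a2, b2, c2 = l2
    w = a1 * b2 - a2 * b1
    if abs(w) < 1e-12:
        return None  # parallel: the vanishing point lies at infinity
    return ((b1 * c2 - b2 * c1) / w, (a2 * c1 - a1 * c2) / w)


def offset_from_center(point, width_px, height_px):
    """Offset (dx, dy) of a point from the image center, in pixels."""
    return (point[0] - width_px / 2, point[1] - height_px / 2)


# Illustrative: two floor lines running into depth, in a 1200 x 2000 px image.
vp = intersect(line_through((100, 1900), (400, 1300)),
               line_through((1100, 1900), (800, 1300)))
print(vp, offset_from_center(vp, 1200, 2000))
```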

After that, we have a grid overlay where each ground-plane square is half a meter, so an approximate distance can be calculated on the floor. Lastly, we try to find something in the scene with a known size. In this case, it could be the bar height, which is often around 104-107cm according to Google. Bar stools are often a standardized height as well.
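For a level camera, the horizon is the horizontal line through the vanishing point, and one object of known height standing on the floor then fixes the camera height without knowing the focal length or distance. A minimal sketch of that single-view relation, assuming zero pitch and roll and image rows growing downward; the helper name and pixel rows are illustrative, not measured from the image.

```python
def camera_height(object_height_m, y_base_px, y_top_px, y_horizon_px):
    """Camera height above the floor from one upright object of known height.

    Assumes a level camera (no pitch or roll) and image y growing downward.
    For a floor point at depth Z: y_base - y_horizon = f*H/Z and
    y_base - y_top = f*h/Z, so f and Z cancel in the ratio.
    """
    return object_height_m * (y_base_px - y_horizon_px) / (y_base_px - y_top_px)


# Illustrative pixel rows only: a 1.05 m bar with its base at row 900,
# its top at row 760, and the horizon at row 740.
print(round(camera_height(1.05, 900, 760, 740), 3))  # prints 1.2
```

It constrains the camera height relative to the bar, but it does not give the focal length or the distance.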

Where 3DSMax makes this process easier is the perspective match tool. Once the image is in the viewport, you simply use the tool to drag the ground plane to where it should be. From there, create a cube that matches the dimensions of something in the scene you identified earlier, in this case the bar: say, a cube 104cm high and 600cm wide. Using the distance and vertical adjustments of the perspective match tool, line up the cube with the bar until they match. Add a camera, set its height to around 120cm, turn on Auto Correct Vertical Tilt, and that's it.
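I don't know exactly how 3DSMax implements Auto Correct Vertical Tilt; conceptually, it does what a shift lens does: the camera stays level so verticals stay parallel, and the framing is moved by a shift instead of a tilt. A minimal pinhole sketch of the equivalent shift, measured on the sensor in mm (hypothetical helper names; how a given application normalizes its film or lens offset parameter is not assumed here).

```python
import math


def shift_for_tilt_mm(focal_mm, tilt_deg):
    """Vertical shift (mm) that makes a level camera's image center look in the
    same direction a camera tilted by tilt_deg would look."""
    return focal_mm * math.tan(math.radians(tilt_deg))


def shift_as_fraction(focal_mm, tilt_deg, frame_dim_mm):
    """The same shift as a fraction of the frame dimension it moves along."""
    return shift_for_tilt_mm(focal_mm, tilt_deg) / frame_dim_mm


# Illustrative: a 24mm lens on full frame in portrait (36mm tall), aimed 8 degrees down.
print(round(shift_for_tilt_mm(24, -8), 2), round(shift_as_fraction(24, -8, 36.0), 3))
```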

As I prefer using Cinema 4D (I'm on a Mac), I tried to camera-match it in C4D and found, like you, the process harder, with mixed results. The focal length in your version of the scene is 90mm, which seems unlikely. I have a 19mm and a 45mm tilt-shift lens for work, and the 45mm would be way too tight for this shot, even if the images were stitched vertically.

When using the Camera Calibrator in C4D, the focal length varied a lot, anything from 35 to 300mm, lol. The vertical grid lines (Y) seemed to be the culprit: adding an extra line could reduce or increase the focal length. I also found it hard, if not impossible, to get the vertical grid lines in the calibrator perfectly straight, and it seems they can't be snapped. I'm guessing this is one of the reasons why the camera's pitch and roll wouldn't be at 0, and you can't adjust them.

I've included some screenshots so you can see what I'm referring to:
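I don't know how the Camera Calibrator solves internally, but the textbook relation for the vanishing points v1, v2 of two orthogonal directions and the principal point c, (v1 − c)·(v2 − c) = −f² (in pixels), shows why the result swings so much here. In a one-point perspective, the depth vanishing point sits almost on c, and with a level camera the verticals are nearly parallel, so their vanishing point is almost at infinity: a product of "almost zero" and "almost infinite". A minimal sketch with made-up numbers:

```python
import math


def focal_from_orthogonal_vps(v1, v2, principal_point):
    """Focal length in pixels from vanishing points of two orthogonal directions.

    Textbook relation: (v1 - c) . (v2 - c) = -f^2, with c the principal point.
    Returns None when the configuration has no real solution.
    """
    cx, cy = principal_point
    dot = (v1[0] - cx) * (v2[0] - cx) + (v1[1] - cy) * (v2[1] - cy)
    if dot >= 0:
        return None
    return math.sqrt(-dot)


# Illustrative: principal point at the origin, the depth vanishing point a few
# pixels off center, and the "vertical" vanishing point far away because the
# traced verticals are almost, but not quite, parallel.
depth_vp = (5.0, -3.0)
for vertical_vp_y in (50_000.0, 500_000.0, -50_000.0):
    print(vertical_vp_y, focal_from_orthogonal_vps(depth_vp, (0.0, vertical_vp_y), (0.0, 0.0)))
```

Tiny changes in how the verticals are traced move the result by a factor of three or leave no solution at all. Multiplying by sensor width / image width converts pixels to mm.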

https://www.icloud.com/iclouddrive/02cb5R_LhEHeotDRFQOAkTwtQ#Camera_Match_Screenshots

-

Hi smckenzie,

I pointed out that it could be a 17mm or 24mm lens. I'm aware of tilt-shift lenses; my lens collection includes a Canon L TS 24.

I discuss all of that in around 200 tutorials here:

https://youtu.be/qUQLeV6sATo?feature=shared&t=176

This is pretty much what I shared: finding the POI/POV and then adjusting the distance while defining the focal length.

Finding the precise camera position is the target, not an eyeballed adjustment. While I shared the steps on how eyeballing could work, you missed that I went in the direction you described.

However, without more data, the image you provided has very little to go on, speaking of precision. You could change the focal length and the distance and still get matching vanishing lines. Sorry to be controversial here, but take a look at any of the architecture images you have shot: how many line up like a central perspective? Very few. Every resource I have had over the past decades of matching cameras tells me not to alter the original image, not to correct it, and surely not to crop it. If that is what 3D Max teaches, then that is sad. If the option makes it easy to find a matching camera, I question the outcome for pro-level work.

Slowly move the time slider to frame zero while the CM render settings are on and the Atom Array is active. Yes, the scene changes, but the vanishing lines do not. There is a lot of guessing without knowing what dimensions the elements have.

Same file as above, but with the CM render settings on and the CM camera active.

CV4_2025_drs_25_CMcp_01_CM_camera.c4d

So, once you have matched this "cage" to the scene with the time slider, open the file below and do the same:

CV4_2025_drs_25_CMcp_02_CM_camera.c4d

The "cage" matches, but everything else is completely different. This is the weak point of the technique when one has no data: it becomes blurry, in other words, not acceptable. I tried to explain it above, and here again; I hope it comes across now. There is too much eyeballing and too little set survey in the way they teach it. I wouldn't use it as the primary method, which is what would be expected when precision is needed.

Yes, the Camera Calibrator can't solve this one, as it has no data to rely on.

The vast differences you get are exactly what I tried to explain with the animated camera in the scene above. Of course, it is not 90mm; I stated that in the text above:

"To have no data of anything from the lens is the next; something tells me it was a 24mm, but I wouldn't wonder if it goes even to a 17mm (assuming a full-frame sensor, 24x36mm)."

Sorry that you ignored all of that.

Good luck.

-

I appreciate all your help.

My response wasn't a criticism of what you had done, far from it. I was just relaying the techniques our tutors use to figure out and model one-point perspective images without any information.

This video shows how they accomplish this in 3dsmax:

https://www.icloud.com/iclouddrive/08d0uPXfm0As-0iPCNPZHMbIg#ONE_POINT

-

Thanks for the reply, smckenzie,

I hope I didn't sound critical either. My wish is that things work as they should. Those techniques become a matter of debate when parts are missing; my concern is whether it can be demonstrated or not. I will provide you with the simplest way I can think of.

I don't have to say anything about the tutorial; just have a look at this file:

CV4_2025_drs_25_CMzm_01.c4d

I could have synced the camera and the cube animation more closely, but compare the first and the last frame. As you can see, the camera's result looks the same, even though the cube changes its size dramatically over time. I hope that makes clear that some data is needed to define a single camera and the object dimensions in a central perspective. Anything else is wild guessing and useless for professional use. The scene shows it so clearly that I have trouble finding anything simpler.
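The effect in that file can be reproduced with plain pinhole math: scaling the whole scene, camera position included, by any factor k leaves the image unchanged, because x = f·X/Z is unaffected when X and Z are both multiplied by k. A minimal sketch with a hypothetical cube and a camera looking straight down +Z (no rotation):

```python
def project(point, camera, focal):
    """Pinhole projection for a camera at `camera` looking down +Z, no rotation."""
    x, y, z = (p - c for p, c in zip(point, camera))
    return (focal * x / z, focal * y / z)


def scale(v, k):
    """Scale a 3D point about the world origin."""
    return tuple(k * c for c in v)


# Hypothetical cube corners and a camera 1.2 m above the floor.
cube = [(x, y, z) for x in (-1.0, 1.0) for y in (0.0, 2.0) for z in (4.0, 6.0)]
camera = (0.0, 1.2, 0.0)

original = [project(p, camera, 24) for p in cube]
# Cube three times bigger, camera three times higher and farther away: same image.
tripled = [project(scale(p, 3), scale(camera, 3), 24) for p in cube]
print(all(abs(a - b) < 1e-9 for pa, pb in zip(original, tripled) for a, b in zip(pa, pb)))
```

Without one real measurement, nothing in the image tells the two versions apart.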


Thanks for sharing this clip. I hope I have clarified that point and put the idea of the clip to rest.

As you noticed, the focal length is not mentioned a single time, but we all know that every image is the specific result of a specific constellation. Hence, the content is dangerous to use in professional settings. There, I said it, and I proved it with the file above.

Again, I'm not interested in being right; my main concern is that everyone here gets information that makes them look good. I would rather go the extra mile than have a "get away with something" attitude. That is not me.

I will check how far we can get if you have some data from the shot above. I love doing these things.

Enjoy your weekend.

-

Summary:

Why correct "set survey data" is crucial, while often completely ignored or misunderstood.

Here is a 17mm and 90mm focal length comparison that creates the same result, while the object has a different depth.

In other words, if little information is available, any conclusion about which camera was used to create one or the other render with identical perspective lines will be complete nonsense.
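One way to reproduce a 17mm/90mm pair like this in pinhole terms: multiplying the focal length by k and pushing every point k times farther from the camera (keeping its X and Y) gives the identical image, since k·f·X / (k·Z) = f·X/Z. A minimal sketch with a hypothetical box, camera at the origin looking down +Z:

```python
def project_from_origin(point, focal):
    """Pinhole projection for a camera at the origin looking down +Z."""
    x, y, z = point
    return (focal * x / z, focal * y / z)


def stretch_depth(point, k):
    """Push a point k times farther from the camera, keeping its X and Y."""
    x, y, z = point
    return (x, y, k * z)


# Hypothetical box: 4 m wide, 3 m tall, spanning 5 m to 12 m from the camera.
box = [(x, y, z) for x in (-2.0, 2.0) for y in (-1.2, 1.8) for z in (5.0, 12.0)]
k = 90 / 17

wide = [project_from_origin(p, 17) for p in box]
tele = [project_from_origin(stretch_depth(p, k), 90) for p in box]
max_diff = max(abs(a - b) for pw, pt in zip(wide, tele) for a, b in zip(pw, pt))
print(max_diff < 1e-9)
```

The 7 m deep box seen through the 17mm becomes a box of about 37 m depth through the 90mm, yet the perspective lines are identical.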

Hence my long discussion.

Click to activate one or the other camera; I have color-coded both. It is easy to see why no information leads to weird ideas about the setup.

I did my first camera match over 30 years ago, for a larger architecture project of mine, in Photoshop 2.5. Since then, I have done a lot of them.

Enjoy