Motion Tracking Problems

-

Hello there

I am using Cinema 4D's motion tracking tool for my thesis.

Unfortunately, a few small problems occurred. I have attached a video so you can get an idea of what I mean.

My problem is that the frames at the beginning of the video don't track, but after that the tracking is reasonably good.

What can I do about it, and where and what do I have to change to get a better result? Many thanks for the help!

Here is the WeTransfer-Link with the videos of the "error":

https://we.tl/t-OFVZTfqWx9

-

Hi climate-court,

Two things become instantly apparent: you have not provided a lens distortion profile, even though the lens seems relatively wide. The other point is that the parallax in the video is relatively small; it is more like a pan with a tiny "dolly-in".

You left the tracker on the clouds in; that should always be cleaned up. The rule of thumb is that anything that moves has no positive influence on the camera evaluation. Besides, only nearby tracker features are represented with plenty of pixels; far-away features are not as usable.

I can't put my finger on it, but it feels like there is a little "rolling shutter" in the footage. Could the compression algorithm of the screen capture used to show the video on a screen create, or even double, the effect? All in all, a second camera documenting a video problem is not a source I can rely on.

Take the camera you used and shoot a lens grid at the distance of the most-used features in the final footage. If the lens grid is shot too close, "lens breathing" will create false data.

Take some measurements from the set; moving the camera around will not help much.

Set the lens to the focal length (view angle) you used, and measure the field of view. Most lenses state a focal length that is NOT accurate. Rule of thumb here: unless the lens was calibrated individually (as cine lenses are), the printed focal length may vary greatly, too much to rely on!

Get the exact size of your sensor, in millimeters. If it is given as "1/3 inch", that is not its size; it is a legacy designation based on the tube diameter of old video cameras, and it is simply wrong as a measurement.
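To make "measure the field of view" concrete, here is a minimal Python sketch, assuming a simple pinhole model: it turns the width the camera covers at a known distance into a horizontal field of view, and then into an effective focal length for a given sensor width. The numbers in the usage lines are hypothetical placeholders, not GoPro specifications, and with a wide, distorted lens this measurement is only an approximation until a lens profile is applied.

```python
import math

def hfov_from_measurement(coverage_width_mm: float, distance_mm: float) -> float:
    """Horizontal field of view in degrees, from the width of the area the
    camera sees at a known distance (pinhole assumption, no distortion)."""
    return math.degrees(2.0 * math.atan(coverage_width_mm / (2.0 * distance_mm)))

def effective_focal_length(sensor_width_mm: float, hfov_deg: float) -> float:
    """Pinhole focal length that produces this horizontal FOV on this sensor width."""
    return sensor_width_mm / (2.0 * math.tan(math.radians(hfov_deg) / 2.0))

# Hypothetical measurement: the frame covers 3 m of wall at 2 m distance.
hfov = hfov_from_measurement(3000.0, 2000.0)   # about 73.7 degrees
# Hypothetical sensor width of 6.4 mm; use the real value in millimeters.
f_eff = effective_focal_length(6.4, hfov)      # about 4.27 mm
```

Comparing `f_eff` with the printed focal length shows how far the label is off for your lens.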

If you can re-shoot, add a head and a tail (leaders) with more parallax to the clip. This will help the central part a lot.

Use the Motion Tracker Graph View to check that you have at least a dozen green lines for each frame. People might tell you that fewer are enough, but then those features must be 100% accurate. Without a lens profile, that will not happen, except perhaps with Master Primes or similar optics.

Set manual trackers on the frames that do not track.

You need to evaluate each tracker feature to see if it is a good tracker or perhaps a false "good" one.

Here are a few tips:

https://youtu.be/bG8NxV_TWOQ?si=YvQ9SC2INvEDLIVW

Since you are writing a thesis, I assume you need more background on your sources. Here we go:

Why do I dare to post tracking tips?

I started learning motion tracking around 25 years ago. Sadly, most of the tracking training on the FXPHD site, where I took at least 15 courses about tracking, is gone.

Besides that, Tim Dobbert's book (2nd edition; I am waiting for a third one) is, as before, my best tip: read it if you want to get deeper into the subject. If you have access to the three Gnomon School DVDs or the streaming version of Tim's content, use them. (I was in the studio in San Francisco, CA, where the content was shot; he is the master of tracking.)

When Dr. Steve Baines started to develop the algorithm you use in Cinema 4D, I met him in 2005 in London, where he explained his approach. I hope that doesn't sound like bragging, but I want to give you confidence in this long text.

All the best

-

Hello @Dr-Sassi,

Thank you very much for your quick and detailed answer!

How can I save a lens distortion profile?

Unfortunately, I don't understand your point about parallax because I'm not that deep into the subject.

How do I turn off the cloud tracker? Can I also solve this with a mask?

Unfortunately, the footage is final; I can't re-film it to improve it...

The sensor size and other data are available. I filmed with a GoPro Hero 10 in Linear mode, 4:3 format, and then applied optical compensation in After Effects (AE). Is that smart, or should I leave the fisheye and track with it?

What can I do to prevent the individual track points from “floating”?

Another problem was that I tracked manual points; they sat perfectly, but then "floated" after the solve.

Thank you very much again!

Best,

Georg

-

Hi Georg,

The GoPro Hero 10 has a lot of in-camera stabilization, which means the footage is not a 1-to-1 representation. (I only have an older GoPro, so my experience is not with the GoPro 10.)

The procedure to create a lens profile is described here: https://help.maxon.net/c4d/2024/en-us/Default.htm#html/TOOLLENSDISTORTION.html?Highlight=lens%20profile.

Use only the data you get directly from the camera, with no adjustments other than color. The mask is an option to exclude moving objects, but it cannot retrieve any spatial information.

Tracker points will be created on high-contrast areas; if one does not match the movement of all the others, delete it.

Parallax is the "perspective" change in the image. The opposite would be a locked-off (tripod) shot, which provides no data about the space. Footage that is only panned (or moved similarly) or zoomed doesn't provide parallax either. (Yes, I'm aware that the GoPro has no zoom option.)
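The difference between a pan and a camera move with parallax can be shown with a tiny top-down pinhole model. This minimal Python sketch is an illustration under simplifying assumptions (2D, ideal pinhole, no distortion; `project_x` is a hypothetical helper): two points on the same viewing ray, one near and one far, stay together when the camera only pans, but separate when the camera moves sideways. That separation is the depth information a camera tracker needs.

```python
import math

def project_x(point, cam_x=0.0, yaw=0.0, f=1.0):
    """Horizontal image coordinate of a world point (X, Z) seen from a pinhole
    camera at (cam_x, 0) rotated by `yaw` radians about the vertical axis.
    Top-down 2D simplification: height (Y) is ignored."""
    X = point[0] - cam_x
    Z = point[1]
    c, s = math.cos(yaw), math.sin(yaw)
    xc = c * X - s * Z   # world -> camera frame rotation
    zc = s * X + c * Z
    return f * xc / zc

near, far = (0.0, 2.0), (0.0, 20.0)   # both start on the optical axis

# Pan only: both points shift by the same amount -> no depth information.
pan = [project_x(p, yaw=0.1) for p in (near, far)]
# Sideways move only: the near point shifts 10x more than the far one -> parallax.
dolly = [project_x(p, cam_x=0.5) for p in (near, far)]
```

A zoom behaves like the pan here: it rescales the image uniformly and does not separate near from far.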

Points that are manually tracked should sit on non-changing features. (I mentioned that in my YouTube link.)

Camera Motion Tracking creates a 3D point representation of the stable world in front of it. Once solved, that "cloud" is static.

The idea behind tracker points is triangulation. Lens distortion changes the speed at which these points appear to move across the frame. This ruins the precision of a stable triangle or leads to a useless result. It is possible to track purely with manual points. However, if the footage and lens profile are not pristine, the rule of thumb that 8-12 tracker points are enough will most likely not hold.
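Why small pixel errors matter so much for triangulation can be sketched with the simplest case: a camera that moves sideways by a baseline, where depth follows from the image shift (disparity) as Z = f·B/d. This minimal Python sketch assumes an ideal pinhole camera and pure sideways motion; the focal length in pixels and the distances are hypothetical. It shows how a tracker that is off by just one pixel (for example, through unmodeled lens distortion) distorts the depth, and how much worse this gets when the parallax is small.

```python
def depth_from_disparity(f_px: float, baseline: float, disparity_px: float) -> float:
    """Depth of a point seen from two camera positions separated sideways by
    `baseline`, from its horizontal image shift (pinhole, no rotation)."""
    return f_px * baseline / disparity_px

def depth_error_for_pixel_error(f_px, baseline, true_depth, pixel_error=1.0):
    """Relative depth error caused by a tracker that is off by `pixel_error`."""
    d = f_px * baseline / true_depth
    wrong = depth_from_disparity(f_px, baseline, d + pixel_error)
    return abs(wrong - true_depth) / true_depth

# Hypothetical: focal length 1000 px, point 5 m away, 1 px tracking error.
small = depth_error_for_pixel_error(1000.0, 0.1, 5.0)   # 10 cm move: about 4.8 %
large = depth_error_for_pixel_error(1000.0, 1.0, 5.0)   # 1 m move: about 0.5 %
```

The same one-pixel error costs about ten times more depth accuracy when the camera moves only a tenth as far, which is why little parallax and an uncorrected lens combine so badly.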

By post-processing material that was already processed in-camera, you put two layers on top of problematic footage. In other words, the ideal camera/lens features for this work are a global shutter (not a rolling shutter), no stabilization, and a lens with minimal distortion. The GoPro is not the camera for this work. Footage with no motion blur (and no added motion blur) is preferable.

Since you mentioned After Effects, try to solve the spatial camera path there, then merge that tracked camera into a Cineware file that lets you bring the result into Cinema 4D. (I haven't done it in a while, so this is brainstorming.)

I'm sure that you went through this material, but I want to make sure you have the following:

https://www.cineversity.com/vidplaylist/motion_tracking_object_tracking_inside_cinema_4d/

All the best

-

Hello @Dr-Sassi,

Sorry for the delay!

The lens profile fixed my problem, thank you so much! BUT: now I want to either render my footage with the correct distortion OR render my tracked objects with distortion to match the footage. I found the technique to "de-distort" the image, but it only works with the Standard renderer, and I am using Octane. When I switch from Standard to Octane, the "Lens Distortion" effect disappears. What can I do to use my lens profile in Octane?

Thanks

Best,

Georg

-

Hi Georg,

The workflow is easy to explain but needs some work to apply.

The practical camera image contains distortion, and the idea is typically that this needs to be left untouched.

There are two options to adapt the rendering to the camera image.

The first is based on a setting in the Standard renderer called Lens Distortion.

https://help.maxon.net/c4d/2024/en-us/Default.htm#html/VPPHLENSDISTORT.html

The lens profile that was created and used to track the footage is applied here. The result is then typically ready to be composited.

The care that went into the lens profile will show here. Typically, only barrel and pincushion distortion are mentioned, but some lenses produce a mixture of both; often, the center of the frame behaves differently from the edges.
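A mixture of barrel and pincushion distortion (often called "mustache" distortion) falls out naturally from a polynomial radial model. This minimal Python sketch assumes a generic Brown-Conrady-style model with two radial terms, with the radius normalized so that 1.0 is roughly the frame edge; it is not Cinema 4D's internal profile format, and the coefficients are hypothetical. A negative first term with a positive second term pulls points inward near the center and pushes them outward near the edges.

```python
def radial_scale(r: float, k1: float, k2: float) -> float:
    """Ratio of distorted to undistorted radius for a two-term polynomial
    radial model. r is normalized (about 1.0 at the frame edge)."""
    return 1.0 + k1 * r**2 + k2 * r**4

def classify(r: float, k1: float, k2: float) -> str:
    """Rough label for the local behavior at radius r."""
    s = radial_scale(r, k1, k2)
    if s < 1.0:
        return "barrel"       # points pulled toward the center
    if s > 1.0:
        return "pincushion"   # points pushed outward
    return "none"

# Hypothetical mustache lens: barrel near the center, pincushion at the edge.
center_zone = classify(0.5, -0.2, 0.3)
edge_zone = classify(1.0, -0.2, 0.3)
```

With these coefficients, the crossover sits at r² = 2/3 (about 0.82 of the way to the edge), which is why a profile that only captures "barrel" or only "pincushion" cannot fit such a lens.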

This is not available for Redshift 3D. The second option relates to Redshift and is based on a specific map from the compositing app, called an ST-Map. Think of it as a UV color-based map, which can be created from two linear gradients placed (added) on top of each other.

https://help.maxon.net/r3d/cinema/en-us/Default.htm#html/Lens+Distortion.html?

Those maps are an industry standard and easy to produce, for example, in NUKE (The Foundry).

To create your own, you can use the Standard renderer. It needs a strictly linear workflow, and every color knot in the two gradients must also be set to linear. This produces a map that covers only the area the camera image captured. In other words, it does not address the required "padding". If that map is applied with the non-padded lens profile, the information will not match.
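What an ST-Map is, and why the gradients must stay strictly linear, can be shown in a few lines. This minimal Python sketch is an illustration, not a renderer setup: it builds an identity map from two linear gradients (red = s from left to right, green = t from top to bottom, sampled at pixel centers) and applies it with a nearest-neighbour lookup. Conventions differ between applications (origin corner, pixel-center offset), so the top-down `t` here is an assumption to check against your target app. The last helper shows what a gamma-encoded (non-linear) gradient would store instead.

```python
def make_identity_st_map(width: int, height: int):
    """Two linear gradients in one map: s (red) runs 0..1 left to right,
    t (green) runs 0..1 top to bottom, sampled at pixel centers."""
    return [[((x + 0.5) / width, (y + 0.5) / height) for x in range(width)]
            for y in range(height)]

def apply_st_map(source, st_map):
    """Nearest-neighbour remap: each output pixel copies the source pixel the
    map points at. A real compositor filters/interpolates instead."""
    h, w = len(source), len(source[0])
    out = []
    for row in st_map:
        out_row = []
        for s, t in row:
            sx = min(w - 1, max(0, int(s * w)))
            sy = min(h - 1, max(0, int(t * h)))
            out_row.append(source[sy][sx])
        out.append(out_row)
    return out

def gamma_encode(v: float, gamma: float = 2.2) -> float:
    """The value a non-linear (display-referred) gradient would store."""
    return v ** (1.0 / gamma)

image = [[y * 10 + x for x in range(4)] for y in range(3)]
unchanged = apply_st_map(image, make_identity_st_map(4, 3))   # identity map
```

An undistorted identity map returns the image unchanged; a lens-distortion ST-Map is the same idea with the (s, t) values displaced. If the gradient were gamma-encoded, the middle of the frame would store about 0.73 instead of 0.5, so the lookup would fetch pixels roughly 73% of the way across the frame instead of from the center.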

Now it gets a little more complex.

Padding the Render Frame

The camera framing and the lens distortion are all in the practical footage. We would like to add padding, so the lens distortion needs to be created for the practical footage plus the padding. This lets us use the padding with an assumed lens distortion, as we do not know what distortion the lens would produce in the padded area. The padding has to be added frugally, meaning only as much as needed.

This padding is done by increasing the resolution of the rendered image, which would do very little if the camera's field of view were not adjusted. The one thing we know for sure is that the field of view will be wider. Calculating the new field of view is a math (trigonometry) problem defined by the new result (camera frame plus padding on the left, right, top, and bottom). In short, if we crop the new, larger image to the camera resolution, the effective field of view (AKA focal length) will match the camera footage, provided the ST-Map was used in the RS Camera with the larger (padded) resolution. I suggest adding the padding so the aspect ratio of the footage stays the same.
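The trigonometry for the padded render can be sketched as follows, assuming an ideal pinhole camera, padding that is centered, and padded pixels at the same scale as the original ones. The helper names are hypothetical, and the 4000×3000 source resolution is a placeholder; 6144 is the padded width suggested later in this thread for the train station. Either widen the horizontal field of view, or (equivalently, with the sensor width kept fixed) shorten the focal length by the ratio of the widths.

```python
import math

def padded_hfov(hfov_deg: float, width: int, padded_width: int) -> float:
    """Horizontal FOV that makes the padded render, once cropped back to
    `width` pixels, match the original framing (pinhole, centered padding)."""
    half = math.radians(hfov_deg) / 2.0
    return math.degrees(2.0 * math.atan(math.tan(half) * padded_width / width))

def padded_focal_length(focal_mm: float, width: int, padded_width: int) -> float:
    """The same change expressed as a focal length, sensor width kept fixed."""
    return focal_mm * width / padded_width

def padded_height(width: int, height: int, padded_width: int) -> int:
    """Height that keeps the footage aspect ratio for the padded render."""
    return round(height * padded_width / width)

def crop_offsets(width: int, height: int, padded_width: int, padded_h: int):
    """Top-left corner of the original frame inside the padded render."""
    return (padded_width - width) // 2, (padded_h - height) // 2

# Hypothetical 4000 x 3000 footage padded to 6144 pixels wide.
h_pad = padded_height(4000, 3000, 6144)           # 4608
offsets = crop_offsets(4000, 3000, 6144, h_pad)   # (1072, 804)
```

Keeping the added pixel counts even on both axes keeps the crop exactly centered. For a fixed focal length this is a one-time calculation; if the field of view changes during the shot, the same formula would have to be evaluated per frame, for example in XPresso.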

If the camera has a fixed focal length, this can be done with a short calculation and setup, but if the field of view varies, XPresso is the way to go.

The previously rendered UV/gradient map can go into the RS Camera. With this padding, the missing parts of the frame are filled.

If you would like to see this integrated into a workflow, please request it via "Share Your Idea". I have done so pretty much every year.

https://www.maxon.net/en/support-center

All the best

-

Hello @Dr-Sassi,

Is there any chance we could have a talk?

Unfortunately, the problems I had at the beginning are recurring, even though I thought I had solved them. I'm at the end of my know-how and would hate to abandon this project. Could you do me a favor and try to track this footage? It's the raw, unedited footage; maybe you will find a way.

https://we.tl/t-N1WyZ58O54

Many thanks for your help

-

Hi Georg,

Thanks for the footage; that helps point out a lot of things.

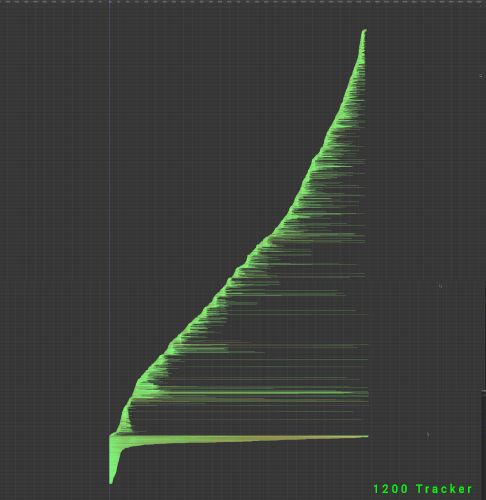

This is far from perfect, and the best I can do in a few hours. It was a lot of manual work on the F-Curves.

I hope you have the footage in RAW, as MP4 is the least suitable format for camera tracking. Simply put, MP4 compression relies on motion estimation between frames to reduce the data, which defeats the purpose of motion tracking. If the algorithm cannot keep up, you will find spikes in the curve. There is more that isn't working. I will share what I found and the main points (material and information) that I would expect to have in a typical pipeline:

https://stcineversityprod02.blob.core.windows.net/$web/Cineversity_Forum_Support/2023_PROJECTS_DRS/20231207_CV4_2024_drs_23_CTls_01.c4d.zip
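Spikes like the ones mentioned above can also be located numerically once you have the per-frame values of a solved camera channel (for example, position X or heading). This minimal Python sketch is a generic outlier check, not a Cinema 4D feature: how you export the curve values is left open, and the threshold of 6 is an arbitrary starting point. It looks at the second difference (the frame-to-frame change in velocity) and flags frames that deviate far more than is typical for the clip, measured with the median absolute deviation.

```python
from statistics import median

def find_spikes(values, threshold=6.0):
    """Return frame indices where a per-frame channel jumps abruptly.

    Uses the second difference (change of velocity between frames) and flags
    frames whose deviation from the median exceeds `threshold` times the
    median absolute deviation (MAD) of all second differences.
    """
    if len(values) < 3:
        return []
    accel = [values[i - 1] - 2 * values[i] + values[i + 1]
             for i in range(1, len(values) - 1)]
    med = median(accel)
    mad = median(abs(a - med) for a in accel) or 1e-12   # avoid a zero threshold
    return [i + 1 for i, a in enumerate(accel) if abs(a - med) > threshold * mad]

# Hypothetical curve: steady motion with a little jitter, plus one bad frame.
clean = [0.1 * i + (0.01 if i % 2 else -0.01) for i in range(20)]
spiky = list(clean)
spiky[10] += 1.0
```

A single bad frame shows up as a cluster of three flagged frames (the one before, the frame itself, and the one after), because the second difference touches its neighbours.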

You might check After Effects 3D tracking and merge the resulting camera into Cineware. But that is outside of my scope here.

Going by what I have shared so far, I feel we need a different approach: read Tim Dobbert's book, 2nd edition. For every camera/lens/resolution setup, a lens grid must (!) be shot. No excuse, no exception. If you want quality, there is no shortcut.

The exact focal length and sensor size need to be known.

If your camera has a rolling shutter, which I believe yours does, forget fast movements such as running around; that is impossible to track. You would need a global shutter for that.

With zero measurements, the results can't be calibrated, and anything you put into the shot is pure guesswork. That is not how it should be.

The set survey is not shot with the camera in use; it is done with sketches and measurements.

A storyboard is needed, or at least a short description of what is needed and which elements will be in the shot. If it is just flying dragons, things are much easier than with a race car moving over uneven ground.

All these things are standard information in production.

If no lens/distortion grid is available, "un-distorting by feel" will not help and should be avoided, as it lowers the pixel precision without improving anything.

Here is my process if I get something like this:

I set up a lot of trackers, adding new ones every 50-100 frames.

I delete all trackers that sit on reflections or are otherwise useless. (As mentioned in a link above.)

The upper half of your footage is not helpful, so I masked it. If the camera has any smoothing or stabilizing functions, forget tracking. The image is distorted. End of story, or someone must invest endless time to get it done.

You need a camera with a global shutter if you want fast and abrupt movements.

Some tips

Typically, a set survey is needed: some kind of measurements, something that lets you understand the topology. The location in your footage has a natural floor that is not even. I would expect at least a clue about what you need, where 3D objects should be placed, or whether they touch the floor at all. If the floor is uneven, randomly placed objects might slip a lot. Hence the request to know where and what needs to be placed in the scene. Sometimes, surface "Reconstruction" might be helpful.

In short, the more you communicate about a target with your project, the more people can help you. I understand that when this is new, it might create some impatience, but I have no remedy for that.

I love the forum's work. Solve it once, and help many.

I might have repeated things here, as I thought it was needed.

All the best

-

Hello @Dr-Sassi,

I understand. My situation isn't the best at the moment, but I'll make the best of it.

I have provided you with all the footage (https://we.tl/t-gHNrO8AdwK) that needs to be tracked. In these clips I only let objects fly through the air, so they don't interact with the ground.

Here is the link to my Miro board (https://miro.com/welcomeonboard/akpPYzR0c0taeDlxSFduZVpGSDRSampSUHRXakhwNWQySHR3MmpnT3NhVloxcWxZbnVlTXJTa29iTTM0MFprRXwzNDU4NzY0NTIxNTUzMjgzNDIzfDI=?share_link_id=570805529948), where you can look at the storyboard. It may not be the best in some places, but you can see the most basic things.

The only scene where I do something on the ground is the "platform" scene, where I let a train pass through (see storyboard).

I know it's asking a lot, but could you see if you can track it here? I'm just reaching my limits...

It's important not to use lens profiles, so I can use the original footage and do the distortion correction in post. Thank you very much for your help!

-

Hi Georg,

This post accidentally contained some other text (a book review note) from my grammar check. Please ignore it and, if you like, delete the email. Thank you.

Thanks for the footage.

Please, no unknown cloud services. I'm not familiar with miro•com. I will not touch it.

If you could share the storyboard via WeTransfer, that would be cool. Thanks for the extra effort. I have checked the Bahnsteig (train station) footage.

Please have a look here:

https://stcineversityprod02.blob.core.windows.net/$web/Cineversity_Forum_Support/2023_PROJECTS_DRS/20231209_CV4_2024_drs_23_TRbs_01.zip

I have invested quite a few hours, and the closest I can get is by keeping all the tracking points in a horizontal band through the middle of the frame, from left to right.

As rolling shutter is typically a vertical time difference, using only a narrow horizontal strip helps limit the problem. When you look at the walkway in the front, there are many compression artifacts, as MP4 also assumes that dark tones do not matter so much. The tracker takes these artifacts for information from the surface.
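Why a horizontal strip limits the rolling-shutter problem can be sketched with a simple readout model. This minimal Python sketch assumes a rolling shutter that reads rows from top to bottom at a constant rate within a given readout time; the 20 ms readout and the 3000-row frame are hypothetical values, not measured GoPro data. Trackers inside a band of rows were captured within a fraction of the full readout time, so they disagree less with each other in time.

```python
def row_capture_offset(row: int, height: int, readout_s: float) -> float:
    """Seconds after the first row at which `row` is read out, assuming a
    constant top-to-bottom rolling-shutter readout."""
    return readout_s * row / height

def band_time_spread(top: int, bottom: int, height: int, readout_s: float) -> float:
    """Largest capture-time difference between trackers inside a horizontal band."""
    return (row_capture_offset(bottom, height, readout_s)
            - row_capture_offset(top, height, readout_s))

# Hypothetical: 3000 rows, 20 ms readout.
full_frame = band_time_spread(0, 3000, 3000, 0.02)     # 20 ms
middle_band = band_time_spread(1200, 1800, 3000, 0.02)  # 4 ms
```

Restricting trackers to the middle fifth of the frame cuts the time spread between them to a fifth, at the cost of fewer usable features.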

With this and everything I have mentioned above, it is unwise to derive a lens distortion map from footage that contains so many artifacts, but I did it anyway to get anything done. Saying that you don't want to work with any treatment of lens distortion is just not an option. End of story; sorry, there is no discussion when a lens with distortion is used, like this one. Especially not in the mix with rolling shutter, stabilization (my best guess, based on the spikes in the F-Curve), and MP4 artifacts (blocks and motion).

Since I write in a forum, I share details that anyone reading along should have.

This is not the first time in over 17 years of running this forum that I have received a request for help after the shooting is done. This is not how it works. The blueprint phase is where the storyboard is done, and from there, everyone who could help brainstorms about it. This is the cheap phase, where nothing is set in stone and things can be designed to work well.

Yes, I get that the lens distortion workflow is not simple, and quite frankly, since we are talking about a whole pipeline, it needs some knowledge. Red Giant has a solution for many apps, but all for 2D work. There is also not a lot of correct information available about lens distortion profiles and padding. If the image is rendered larger, the camera must be adjusted so that a wider field of view correctly produces the extra pixels. But, and this is super important, the lens distortion profile used for the un-padded image can't (!) be used for the padded version, as it would be applied to the larger image and would not work correctly for the original image area.
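Why the un-padded profile breaks on a padded image can be seen in a toy model. This minimal Python sketch assumes a hypothetical one-term radial profile whose radius is normalized by the frame's half-width (real profiles may normalize differently, for example by the half-diagonal, and have more terms; Cinema 4D's profile format is not modeled here). The sizes and coefficient are placeholders. Applied naively to a padded frame, the same pixel gets a smaller normalized radius and therefore too little distortion; re-expressing the coefficient for the new normalization restores the original area, while everything outside it remains an extrapolation.

```python
def distort_x(px: float, center: float, norm_half_width: float, k1: float) -> float:
    """Distort a horizontal pixel coordinate with a hypothetical one-term radial
    model whose radius is normalized by `norm_half_width`."""
    r = (px - center) / norm_half_width
    return center + (px - center) * (1.0 + k1 * r * r)

def rescale_k1(k1: float, old_half_width: float, new_half_width: float) -> float:
    """Coefficient that gives the same pixel displacement after renormalizing
    the radius from the old half-width to the new one."""
    return k1 * (new_half_width / old_half_width) ** 2

# Hypothetical: 4000 px wide original, padded to 6144 px, k1 = -0.1.
# The original right edge is 2000 px from the center in both frames.
correct = distort_x(4000.0, 2000.0, 2000.0, -0.1) - 2000.0   # 1800 px
naive = distort_x(5072.0, 3072.0, 3072.0, -0.1) - 3072.0     # about 1915 px
fixed = distort_x(5072.0, 3072.0, 3072.0,
                  rescale_k1(-0.1, 2000.0, 3072.0)) - 3072.0  # 1800 px
```

In this example, the naive reuse misplaces the original frame edge by more than 100 pixels, which is exactly the kind of mismatch described above.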

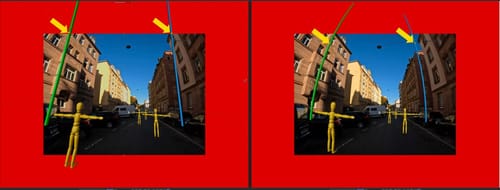

In your case, the render needs to be larger, as the distortion required for the 3D rendering makes things smaller. The 6144-pixel-wide suggestion is for the train station, as even 6K does not cover the corners completely. These padding workflows are (again) not simple and need care. In the image, the red area is the padding. (Why always these screaming colors? So they are not accidentally overlooked under deadline stress.)

Let this all sink in for a while. I believe it clicks at some point, and then it is fun to do.

Sometimes it might be useful to set this up as camera mapping, especially with the train station, as the train running through will cover a lot and take all the attention.

One tip, if there is nothing in the scene itself that moves, move the camera much slower; the "factor of slower" is pretty much the "factor of less Rolling Shutter".
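The "factor of slower is the factor of less rolling shutter" rule follows directly from a constant-readout model. This minimal Python sketch assumes the image content moves sideways at a constant speed during one frame's readout, and that the readout is shorter than the frame interval; the 20 ms readout and the motion values are hypothetical. The horizontal offset between the top and bottom rows of a frame is then proportional to the camera speed.

```python
def rolling_shutter_skew_px(motion_px_per_frame: float, fps: float,
                            readout_s: float) -> float:
    """Horizontal offset between the first and last row of one frame when the
    image content moves sideways at a constant speed during readout."""
    speed_px_per_s = motion_px_per_frame * fps
    return speed_px_per_s * readout_s

# Hypothetical 30 fps footage with a 20 ms readout.
fast = rolling_shutter_skew_px(40.0, 30.0, 0.02)   # 24 px of skew
slow = rolling_shutter_skew_px(10.0, 30.0, 0.02)   # 4x slower move: 6 px
```

Moving four times slower reduces the skew by the same factor of four.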

Here is a little example. Since it is late, I did not do the train for the last few seconds.

https://stcineversityprod02.blob.core.windows.net/$web/Cineversity_Forum_Support/2023_Clips_DRS/Bahnsteig_2b.mp4

This was a long day, close to midnight here in L.A., and a Saturday. I call it a day.

All the best